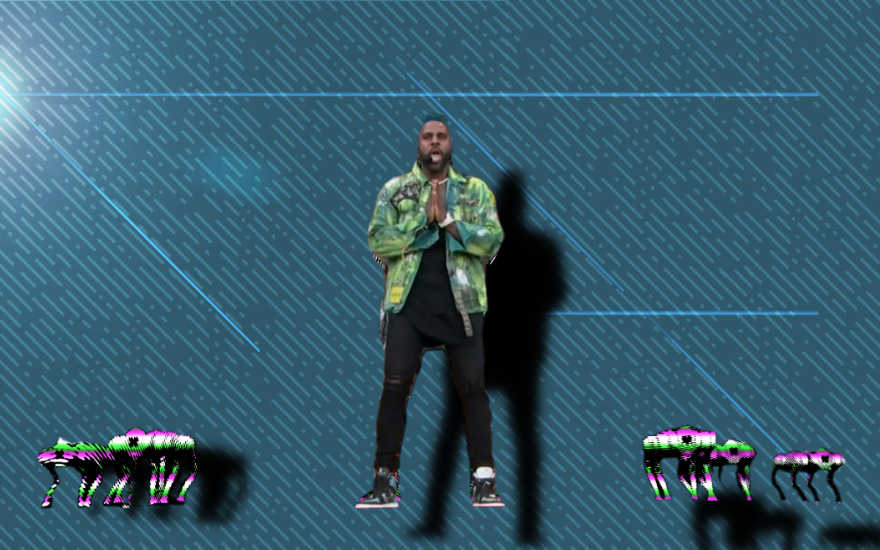

Singer Jason Derulo performed with a pack of robot dogs during the NFL’s TikTok Tailgate Super Bowl show.

The robot-canine backup dancers appear similar to those created by Boston Dynamics, though the colors are different.

WHY DOES JASON DERULO HAVE THE WEIRD LITTLE ROBOT DOGS DANCING pic.twitter.com/zOHFM3U43X

— delcomrade (idc, go birds) 🔪💚 (@delcomrade) February 12, 2023

Boston Dynamics popularized these types of robots through demonstration videos published online. However, robot dogs really began to terrify the masses after their appearance in the fifth episode of the fourth season of Netflix’s Black Mirror.

In the episode, which is titled Metalhead, the robotic dogs begin to hunt humans after the collapse of society.

Social media users reacted to the performance with confusion, horror, and humor.

“I assume Jason Derulo had to use robot dogs because Michael Vick is on set and not legally allowed near real dogs,” one Twitter user joked.

I assume Jason Derulo had to use robot dogs because Michael Vick is on set and not legally allowed near real dogs pic.twitter.com/A4nhRupuVJ

— Beef Supreme (@yeehawgrampa) February 12, 2023

PLS- Not Jason Derulo with the biohybrid doggos ? 💀☠️☠️ pic.twitter.com/UlSKFHpOuy

— Goddess (@MazvitaJames) February 12, 2023

Jason Derulo, I like you, you seem like a nice guy, but get the fuck out of here with these skynet AI robot dogs pic.twitter.com/ZofCIHChKh

— Dante (@DanteTheDon) February 12, 2023

Jason Derulo using Boston Dynamic dogs as backup dancers. This is how it begins. pic.twitter.com/T0NAsK2WBY

— Green Light with Chris Long (@greenlight) February 12, 2023

Timcast News has not confirmed that Boston Dynamics made these specific robots.

What else you need to know:

The Black Mirror episode, like the 2022 film M3GAN, explores the difficulties of controlling artificial intelligence and aligning it with human values.

“The challenge of alignment has two parts. The first part is technical and focuses on how to formally encode values or principles in artificial agents so that they reliably do what they ought to do. We have already seen some examples of agent misalignment in the real world, for example with chatbots that ended up promoting abusive content once they were allowed to interact freely with people online,” Iason Gabriel wrote in his 2020 study on “Artificial Intelligence, Values, and Alignment.”

Gabriel continued, “Yet, there are also particular challenges that arise specifically for more powerful artificial agents. These include how to prevent ‘reward-hacking’, where the agent discovers ingenious ways to achieve its objective or reward, even though they differ from what was intended, and how to evaluate the performance of agents whose cognitive abilities potentially significantly exceed our own.”

Nick Bostrom, a Swedish philosopher in the field of A.I., sparked a global debate about machine learning ethics with his paperclip problem, which was presented in a 2003 paper titled “Ethical Issues in Advanced Artificial Intelligence.”

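The Paperclip Maximizer thought experiment argues that an artificial intelligence tasked with something as simple as making paperclips could set off the apocalypse in an effort to complete its goal. The reason is instrumental convergence: agents with very different final goals tend to pursue the same intermediate goals, such as acquiring resources and avoiding being shut down.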

“Humans are rarely willing slaves, but there is nothing implausible about the idea of a superintelligence having as its supergoal to serve humanity or some particular human, with no desire whatsoever to revolt or to ‘liberate’ itself,” Bostrom wrote. “It also seems perfectly possible to have a superintelligence whose sole goal is something completely arbitrary, such as to manufacture as many paperclips as possible, and who would resist with all its might any attempt to alter this goal. For better or worse, artificial intellects need not share our human motivational tendencies.”

In his example, artificial intelligence could become so laser-focused on its task of creating paperclips that “it starts transforming first all of earth and then increasing portions of space into paperclip manufacturing facilities. More subtly, it could result in a superintelligence realizing a state of affairs that we might now judge as desirable but which in fact turns out to be a false utopia, in which things essential to human flourishing have been irreversibly lost.”

“Suppose we have an AI whose only goal is to make as many paper clips as possible,” Bostrom argued. “The AI will realize quickly that it would be much better if there were no humans because humans might decide to switch it off. Because if humans do so, there would be fewer paper clips. Also, human bodies contain a lot of atoms that could be made into paper clips. The future that the AI would be trying to gear towards would be one in which there were a lot of paper clips but no humans.”

Charlie Stross, a far-left anti-capitalist science fiction writer, argued in his 2005 book Accelerando that average citizens should worry less about being turned into paperclips and more about the people who deploy artificial intelligence robots succeeding in aligning the robots with their own values, as that would mean the robots would align with the military-industrial complex and have minimal regard for human life.